About

Android, Linux, FLOSS etc.


Mon, 13 Nov 2023

I wanted a smartwatch to see my heart beats per minute, number of steps walked and so forth. I also wanted one where the software and complementary apps were or could be free and open source. A smartwatch running WearOS (or alternatively AsteroidOS) would fit that bill. It also would run a variant of the Android operating system, which I am familiar with. Another alternative is the PineTime smartwatch, which is really geared to a free and open source audience, and which was only $27 plus shipping. I could have bought a dev kit which costs about the same amount, but at this point did not. Theoretically I could write my own OS for the smartwatch if I had the dev kit, but for now I am running the default InfiniTime OS for the watch.

I ordered the watch two weeks ago and it was just shipped to me Friday. It is running InfiniTime 1.11.0 from October 16, 2022. It does not yet run InfiniTime 1.13.0, released June 24 of this year with an improvement in heart rate processing. InfiniTime is GPLv3, and I was reading through the 1.13.0 heart rate improvement and that code is written in C++.

The watch came with a charger, so I charged it via the USB plug and dock. It was suggested that Gadgetbridge, Siglo and WatchMate would interface with the watch, so I put Gadgetbridge on my Android phone, and Siglo and WatchMate on my Linux.

The watch was sending its battery level to my Android phone and Linux box via Bluetooth. It also sent the number of steps taken. It was not sending heart rate. However, when I went into the watch and asked for heart rate to be measured, it began measuring it.

I wanted to get an idea of my resting heart rate, my heart rate when moving around a little, and my heart rate when I go to the gym and get on the treadmill. The BPM floats around a bit, with some peaks when resting and valleys when moving quickly on the treadmill, so I even those out in my head as probably error. On the whole, increased activity tends to increase average heart rate. On the treadmill, I now have a better idea of what incline and speed is 60% of maximum BPM, 65%, 70% etc. This was my first time with the watch. I will probably keep pushing a little and seeing what some higher rates are, but I am not currently planning on doing a lot of HIIT in the near future. Just enough to keep me healthy and maybe lose a few pounds.
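The percentages above are simple arithmetic on a maximum heart rate. A minimal sketch, assuming a made-up maximum of 180 BPM (a real maximum varies from person to person, and the function name is only for illustration):

```python
def target_bpm(max_bpm: int, percent: int) -> int:
    """Heart rate (BPM) at the given percentage of the maximum."""
    return round(max_bpm * percent / 100)


# Hypothetical maximum, not a measured value.
MAX_BPM = 180

for percent in (60, 65, 70):
    print(f"{percent}% of {MAX_BPM} BPM is {target_bpm(MAX_BPM, percent)} BPM")
```

Matching those numbers to a treadmill incline and speed is still something the watch readings have to tell you.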

After having F-Droid download Gadgetbridge and pairing it with my watch, I have been sending battery, step, and heart rate information to the app. I have also been sending it to Siglo and WatchMate on my Linux box. I have not used Flatpak with Linux a lot, but I got those two apps and used them with Flatpak. They are OK, although Gadgetbridge has been more of what I am looking for out of the box.

This gives me a better idea of how much cardiovascular exercise effort I should be making at the gym, and if I use it along with an exercise regimen, should keep me healthier, as I know better how far I can push my exercise. The watch and related apps are open-source, so I have more control over all of this information, and can make changes to the watch and auxiliary applications if I want to.

Thu, 16 Mar 2023

I have been using GPT-3, and GPT-4 just came out. Finding this interesting, I downloaded LLaMA. I have LLaMA on my desktop. There is a gradio, Automatic1111-like text generation web UI that lets large language models be targets. Normal LLaMA does not work for my desktop's Nvidia RTX 3060 with 12G VRAM, so I got 4 bit models, which may be substandard, but what can I do? LLaMA comes in different parameter sizes: 7B, 13B, 30B and 65B. My Nvidia card can handle 13B parameters, but my Nvidia card conks out when trying to do the 30B parameter one. The model is 25 gigs large. Reminds me of how Marvin Minsky used to say that when AI is really figured out, it will be able to be run on a typical home PC.
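As a rough back-of-the-envelope check on why 4 bit models help here, the sketch below estimates the memory taken by the weights alone as parameter count times bits per weight. It assumes the nominal parameter counts (7B, 13B, 30B, 65B), ignores activations and other runtime overhead, and the function names are made up for illustration.

```python
def weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits_in_vram(params_billions: float, bits_per_weight: int, vram_gb: float) -> bool:
    """True if the weights alone fit; real usage needs extra headroom."""
    return weights_gb(params_billions, bits_per_weight) <= vram_gb


VRAM_GB = 12  # the RTX 3060 mentioned above

for size in (7, 13, 30, 65):
    gb = weights_gb(size, 4)
    verdict = "fits" if fits_in_vram(size, 4, VRAM_GB) else "does not fit"
    print(f"{size}B at 4 bit: about {gb:.1f} GB of weights, {verdict} in {VRAM_GB} GB")
```

Under these assumptions 13B at 4 bit comes to about 6.5 GB and 30B to about 15 GB, which lines up with the 13B model loading and the 30B one conking out.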

So with the text generation web UI I am now talking with LLaMA, which is more interesting than talking to Eliza.

Common sense questions and answers

Question: How many legs does a dog have?

Factual answer: 4

Question: What does the German word "unbehagen" mean in English?

Factual answer: It means discomfort, unease.

Question: Who wrote a letter to the Corinthians circa 53 AD?

Factual answer: Paul of Tarsus.

Question: What is 22+33?

Factual answer: 55

Question: What is the astrological sign of someone born March 16?

Factual answer: Pisces.

Question: Who designed the movie poster for the movie "Vertigo"?

Factual answer: The designer was Bill Gold.

OK, finally got him. Saul Bass designed the movie poster for Vertigo (Bill Gold did design the posters for other Hitchcock movies, like "The Wrong Man" though). What does ChatGPT say? (I am pointed to GPT-3, not paying for GPT-4 currently.)

Who designed the movie poster for the movie "Vertigo"?

The iconic movie poster for the film "Vertigo" was designed by the renowned graphic designer Saul Bass. Bass was known for his innovative and memorable movie posters, as well as his work in title sequences and corporate logos. The poster for "Vertigo" features a stylized image of a woman's face and swirling, vertigo-inducing shapes, which perfectly captures the mood and themes of the film. The poster is considered a classic of graphic design and is still widely recognized and admired today.

Thu, 13 Oct 2022

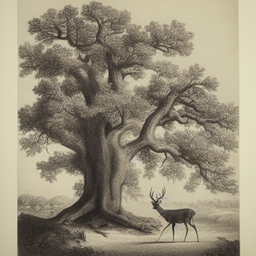

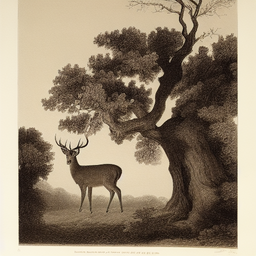

On August 31st, Stable Diffusion was released. It is a computer program where you tell it what image to create and it creates it. So you say "tree" and it will give you a picture of a tree. You say "oak tree" and it will give you a picture of an oak tree. You say "oil painting of an oak tree" and you will get an oil painting of an oak tree. You say "lithograph of an oak tree" and you will get a lithograph of an oak tree. You can say "lithograph of an oak tree and a deer" and get this -

You can ask for it to give you some alternatives, in which case you can also get another try at it like

It is pretty simple to use - you tell it what image you want to see and it will attempt to give you that image.

It can do other things as well - if you like part of a picture it draws, but not another part, you can erase the part you don't like ("inpainting") and tell it to try something else. If you like a picture but want to see the surrounding envisioned scenery, you can ask for that as well ("outpainting"). If you give a text prompt and ask for some alternative pictures, and like one particular one, you can ask to make more pictures to look like that one. You can also do all of these things - inpainting, outpainting, image-to-image - with already existing pictures it did not create.

Stable Diffusion was released for free, and the source of the software is available (as are the pre-trained weights of its model). I am running it on my desktop at home. It can be run on a server on the Internet as well (hopefully one that has one or more GPUs).

Programs like this have been developed by other places in the past two years - DALL-E by OpenAI, Imagen by Google, Midjourney. There has been some limited access to them as well, although sometimes only by invite. Stable Diffusion just dropped this so that everyone can use it, and I am sure it will speed the adoption of deep learning.

AI

To go a little back into the history of all of this - in 1956 John McCarthy, Marvin Minsky, Claude Shannon and Nathaniel Rochester held a workshop on "artificial intelligence" for a study - "The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it."

Around this time, two schools of thought arose on how to do artificial intelligence - symbolic AI and connectionist AI. One idea in symbolic AI is that there are facts, and with a set of facts, one can use logic to come to conclusions. Symbolic AI is fairly understandable - you can understand and explain how a computer reasoned about something. The other school of thought in AI was connectionism. The idea in connectionism was to model the computer on the human brain - our brain is neurons which are connected, and connectionist AI created artificial neurons and wired them together. Connectionism has a focus on learning - data is sent through the neural network, and can be "remembered" later. Stable Diffusion is more in the connectionist school, which is the machine learning, deep learning school.

The connectionist school had some successes in the early days, but the 1969 book Perceptrons pointed out flaws in some things being done back then, which may have caused interest in connectionism to wane somewhat, and the success of some GOFAI type expert systems in the 1980s also favored that school over connectionism. However for the past ten years the connectionist deep learning school has seen a resurgence, and is in use at most of the big tech companies, and Stable Diffusion is part of this trend.

Stable Diffusion

Stable Diffusion uses a model to do what it does, and it cost about half a million dollars to train this model. The model is 4 gigs large on my computer, and it's amazing what I can generate on my home computer with that 4 gig model. It's truly mind-blowing, and gives some insight into how the brain works and what computers will be able to do in the future. Stable Diffusion generates a lot of great stuff, but has trouble trying to generate some things (like a sign that says "WARNING" or something like that, or human faces in some cases). As time goes on, it and other systems will just get better.

You can download Stable Diffusion to your computer, but I know what I'm doing and had some difficulty getting it working. People are at work to make it simpler to do. You do need a decent graphics card. There are also forks of Stable Diffusion which may be simpler to install, or offer more features. If you think you can handle it, you can get Stable Diffusion here.

Probably easier is to just go to a website that takes text prompts and generates images, like Stable Diffusion web, Dezgo or NightCafe.

Tue, 16 Nov 2021

Android - compiling and sideloading it onto an Android device

In 2008, Google came out with a free and open source operating system, which ran on the showcase G1 phone. I soon began to learn how to program applications for Android.

That the operating system was free and open source, and that I could theoretically compile the Android operating system on my computer and sideload it onto a phone was a very exciting prospect for me. Nonetheless, due to lack of time, as well as various roadblocks (including ignorance about how to do it), I never actually sideloaded Android onto an Android device; I always used the Android OS installed by the manufacturer.

In July of last year I took another stab at it. Over three weekends I dove into the subject. I learned a little about boot unlocking, fastboot, and LineageOS. I bought a Samsung tablet, primarily so as to sideload onto it, as there seemed to be a bootable image for it, and I could buy it at Best Buy. But then I learned that Samsung did not use Android fastboot like most Android devices; it used something called Odin. After three weekends I became busy with other things and put the whole thing off.

Work entered a slack period and I had some time off, so I decided to make another go at it. Android's source code is made available via the Android Open Source Project (AOSP), but they don't provide a lot of support to non-vendors, and compiling straight off of Google is daunting for someone without much experience with this. There are third-party projects, based mostly on Android, which help people load an Android-like system on their Android devices. They provide a little more hand-holding, and support for random people compiling Android source code and sideloading it onto devices. LineageOS is one of the most popular of these, so I decided to use LineageOS.

Last year I got stuck with an Android device I couldn't sideload to, so this time around, I wanted to make sure I got one that I could sideload onto. I wanted one officially supported by LineageOS, not one I would have to futz with to install with LineageOS. I also wanted one I could buy at Best Buy or the like. Having both of these conditions as true is difficult, as it takes a while for an Android device to be officially supported by LineageOS, yet Best Buy tends to sell newer Android devices. By the time a device is officially supported by LineageOS, Best Buy is often not selling it any more.

As Samsung did not use the standard fastboot, Samsung was off my list. Also, I wanted a device officially supported by LineageOS, but that was still being manufactured. With that in mind, there were very few tablets that could be used. The ones theoretically available looked a little difficult to get a hold of in the time I had. Most of what was available were phones. I would have preferred a tablet at this point, but phones were most of what was available, so a phone it would be.

Most of what was non-Samsung, had official LineageOS support, and could be picked up at nearby Best Buys were Motorola phones, including a $149 Moto G Play at a Best Buy outlet. I drove there and was there when it opened on a Saturday. I asked a woman for the Moto G Play. She could not find it in the rack. I then asked for two other Motorola discount phones. She could not find them either, nor in the back. The next best thing was a discount Motorola Edge 5G phone at another Best Buy. I drove there and they had it and I bought it. Box price was $700 but I got a couple of hundred off.

So I turned on the device, then turned on developer options. Then I allowed USB debugging and OEM unlocking for the phone.

I am using a desktop running Ubuntu 21.10. I add myself to the plugdev group on my Ubuntu 21.10 desktop and add some udev rules. I get a response for "fastboot devices", and "getvar version" works when the phone is in the recovery mode. Now I want to unlock the phone. I try on my Ubuntu 21.10 desktop to do "fastboot oem get_unlock_data" but it does not go. So I boot up a Macbook and I follow the instructions to do it on the Macbook and I get the unlock data. I then send the data to Motorola on the web and they send a key back when I agree to void the warranty. So I enter the key and now the phone is unlocked.

Speed is the key here, and unlocking works on the Macbook but not the desktop, so I just proceeded with it on the Macbook instead of investigating. I probably won't unlock this device again, but if I unlock another one I can look into it more. I now turn off the Macbook and boot up my laptop running Ubuntu 21.04 and work off of it. I may have been able to have gotten the oem data and unlocked the phone from my System76 Ubuntu 21.04 laptop, but I did not try.

So on my System76 Ubuntu 21.04 laptop I download the LineageOS Edge (racer) recovery image, copy partitions zip, and the latest LineageOS nightly build for racer (Moto Edge). I fastboot flash the recovery image onto the Edge. I turn the phone on and off and - I see the new recovery screen. I then sideload the copy partitions zip. I then sideload the LineageOS nightly build. I go back to reboot system on the recovery screen. LineageOS boots! I set it up. Cool. Wifi works. Camera works. I turned USB debugging on in settings and shell in on ADB. It works. Cool!

OK next step - I want to compile LineageOS myself. I install the suggested packages on my Ubuntu 21.10 (similar to AOSP suggested packages). I follow the instructions, then do a "repo sync" which says it will take a while - and it takes a few hours.

So the repo synced. Now I specify I am building for the Moto Edge (racer). In terms of doing this simply and step-by-step, I probably missed an overall step here, and that would be to compile LineageOS for a standard AVD/emulator, without worries of firmware, unlocking and so forth. I could have seen if I could compile the Android OS for an Android emulator, and then dealt with specific hardware. I could have done that before buying the Motorola Edge.

So my build fails. Ubuntu 21.10 was released fairly recently, and it uses glibc 2.34, which this racer/Edge branch of LineageOS (and AOSP?) may have trouble with.

So I start the build process over on my laptop running Ubuntu 21.04. The Edge (racer) had payload based OTAs in its A/B partitioning system, and I extract them. So I keep going and on my laptop - LineageOS does compile. My build of the recovery image seems to have problems, so I flash the stock one I downloaded. But the LineageOS image I built on my laptop and sideloaded does go on successfully. I can even go to my settings build number and see the build. So I built my own Android OS and sideloaded it onto the phone. Yay!

So that was enough progress for the down time that I had. Next steps - turning the phone on with T-Mobile (Sprint) if they do that. That would cost money though. I extracted proprietary blobs from the phone, so putting Google services back on might be something I do, if it is allowed. Figuring out if my Ubuntu laptop or desktop can unlock Motorola phones (did not try with the laptop). Modifying things in the build source code and reloading the operating system. Seeing if this can build on the 21.10 desktop. If not directly, then building with a 21.04 KVM on the 21.10 desktop initially. Building not just the OS image but the recovery image.

Also, a look at the Linux drivers and such for the parts of the phone (camera, screen, microphone, speaker etc.) is interesting, but as I am still so unfamiliar with all of this, that might be overreaching. Doing it with my desktop might be easier, but things like these Android devices are where the bleeding edge is.

Any how, I finally got to build and sideload the Android operating system with the LineageOS modifications. The LineageOS instructions say "It's pretty satisfying to boot into a fresh operating system you baked at home :)" and that's kind of how I feel.

Tue, 30 Apr 2019

So 7, almost 8 years ago I released my second Android app, which was able to load Microsoft Access databases on Android. The first release was four days from idea to release. I improved it over time, and then stopped working on it once people seemed happy enough. Eventually it got a real competitor or two, and I left the field to them, unpublishing the, by then, out of date app.

Well as I noted two days ago, after 7, almost 8 years, I got past my second major hurdle in my spreadsheet app that I have been working on, on and off, for over seven years. But there is a lot of work to be done, and I may have bitten off more than I can chew, especially since I am doing this solo, and am busy with other things as well. I would need to put in more features before I thought of releasing my spreadsheet app on Google Play.

The spreadsheet framework I did is one that works well not just for a spreadsheet app, but for an Android database app too. My spreadsheet framework fixes many problems people had complained about with the old database app I had done, like ability to scroll.

There are some good Android spreadsheets around (although there are not many Android spreadsheet apps despite their popularity - probably due to the complexity of making an Android spreadsheet), which have a number of good features which my spreadsheet app does not.

However, for my new updated database app, which now uses my spreadsheet framework, even at this early stage, there are only about two real competitors. My updated database app is already at a stage where it is competitive with them. I have features their apps do not have, and they have some features my app does not have. I will be working to improve the app.

The database app makes a good test of the spreadsheet framework. That is the main reason for the database app release - to put the spreadsheet framework out there and have real users bang on it and see if any problems develop that need to be fixed, see if they make suggestions and so forth. Best to implement fixes and features in response to real world usage.

The app can be found on Google Play.

Sun, 28 Apr 2019

By June 17, 2011, I had published two Android apps - an app to verify US driver's licenses (the idea was suggested to me), and an app that could open Microsoft Access databases on Android. The database app had successfully leveraged an existing FOSS Java library, so when I was casting about for my next app, I saw that there was a library (POI) that could handle Microsoft spreadsheets. With what I now realize was enormous hubris, I decided that a spreadsheet would be my next Android app.

I wanted to make sure the library worked, so I did a simple app where I load an XLS spreadsheet and displayed some information in the console. It worked! Great! I assume XLSX will work, so I spend the next two weeks completely focused on building out the Android UI etc.

After two weeks of doing UI work, I decide to try to load some XLSX files. Uh-oh. Including all the libraries needed for that makes the Android Dalvik Executable file exceed 2^16 (65536) methods. And Android Dex files only have a 16 bit identifier for methods at that time. I do not know this, I find out the hard way (and the error message at the time was pretty obscure).
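The arithmetic behind that limit is small enough to sketch. The method counts below are invented purely for illustration (they are not measurements of POI or of my app), and the helper name is hypothetical.

```python
from typing import Mapping

METHOD_ID_BITS = 16
MAX_METHODS_PER_DEX = 2 ** METHOD_ID_BITS  # 65536 distinct method identifiers


def fits_in_single_dex(method_counts: Mapping[str, int]) -> bool:
    """True if the app plus its libraries stay within one Dex file's method limit."""
    return sum(method_counts.values()) <= MAX_METHODS_PER_DEX


# Hypothetical method counts, for illustration only.
xls_only = {"app": 3_000, "xls_support": 40_000}
with_xlsx = {"app": 3_000, "xls_support": 40_000, "xlsx_support": 30_000}

print("XLS only fits:", fits_in_single_dex(xls_only))
print("With XLSX fits:", fits_in_single_dex(with_xlsx))
```

The point is only that a library large enough can push an otherwise small app over the line on its own.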

I spend some time trying to get around this and read about it, and finally throw in the towel, post the code on Github, and move on. Which was not a bad idea - I was new to Android, Android had not developed as a platform, and I released other apps which made me tens of thousands of dollars in profit.

In November 2014 Android replaces Dalvik with ART, and suddenly the 2^16 method limit becomes more manageable. One thing Android programmers had been saying was that Google was suggesting use of the support library, and THAT had grown to over 10,000 methods. So the libraries Google suggested were slowly approaching a big percentage of that limit. Any how, they fixed it before it became a problem.

I was too busy for a few months to look into it, but then I jumped back into the app again. Loading XLSX now worked, cool! I spent a few months working on the spreadsheet. I was playing around with content providers at the time, so I modified it to allow loading of Android system SMS messages, contacts, call logs, calendars and such. I also tinkered with hooking it up to the Jackcess library, as my old database app UI was becoming obsolete.

But then I ran into another barrier - layout. I was laying out the spreadsheet from cell A1 and calculating width and height from there. Which means jumping to say cell AAA9999 would mean a lot of calculation before the jump.
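To make the cost of that jump concrete, here is a sketch of one common way around it: keep running totals of column widths (or row heights) so that a cell's pixel position is a lookup, and finding the cell at a scroll position is a binary search. This is a generic technique under my own assumptions, not necessarily how the layout manager in the app ended up working, and all names are made up.

```python
from bisect import bisect_right
from itertools import accumulate


def column_index(name: str) -> int:
    """Convert a spreadsheet column name (A, B, ..., Z, AA, ...) to a 0-based index."""
    index = 0
    for ch in name.upper():
        index = index * 26 + (ord(ch) - ord("A") + 1)
    return index - 1


def build_offsets(sizes):
    """offsets[i] is the pixel where item i starts; the last entry is the total size."""
    return [0] + list(accumulate(sizes))


def item_at(offsets, scroll_px):
    """Index of the row or column containing scroll_px (valid for 0 <= scroll_px < total)."""
    return bisect_right(offsets, scroll_px) - 1


# Hypothetical sheet: 1000 columns, each 80 pixels wide.
col_offsets = build_offsets([80] * 1000)
target = column_index("AAA")
print("Column AAA is index", target, "and starts at pixel", col_offsets[target])
print("Pixel 170 falls in column index", item_at(col_offsets, 170))
```

The offsets are built once (and patched when a width changes), so jumping to a far-away cell no longer means walking every cell from A1.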

So I put the app aside for another three years and change. Then on November 24, 2018 I take a look at it again. I start from scratch working on just the layout manager part. I rewrite the app in Kotlin, and pull in what is needed from the old Java app. I work until December 14th and get stuck again on the layout manager that I have already spent so much time on. It does not scroll to the top or left smoothly. If I scroll fast, cells do not get filled in. I also am skipping rows and columns when scrolling.

I have some time on March 19th. I know the smooth scrolling to the top or left is probably easy to fix. So I do fix it, great. What is left now is skipping cell layouts, and skipping ahead for whole rows and columns, going from P and Q to say T and U, with no R or S column. I figure those are difficult, but I have the easy one done. So I make an effort to not skip rows and columns. It takes an effort - but I do it! Now just one more left - the cells not filled in. I decide to clean up the code some first though, as it is getting unwieldy. I combine functions, I name things clearly, I create enum names for clarity.

I debug it and see what is happening. This takes a while as well. Then - it works! It had been an off-by-one error; it only happened when the number of pixels I scrolled was exactly how many pixels of laid out cells were off-screen. Cleaning up the code helped make it easier to find the problem. Great! Wow, I thought I was just taking another stab at it, but between March 19th and April 3rd, I fixed all three errors, including the two big ones.

So from April 3rd to April 23rd I pull in the features from the 2015 code, translating into Kotlin, and sometimes improving them. Then I pushed the code to Github. From April 23rd to April 27th I put in code to handle jumps, some ODS spreadsheets, ability to search, multiple sheets over multiple tabs, ability to handle incoming spreadsheet intents, and then handling XLS/XLSX row heights and column widths, as well as some other things. Some of this code was pulled from the 2015 code (sometimes rewritten significantly).

Aside from the old XLSX and Dex problem, the layout manager had been the other hurdle. Which seems solved now. Lots of the little things needed can be put in.

Right now I am concentrating on proper spreadsheet viewing. Editing and saving I am not dealing with yet. I have a git branch on my workstation, not pushed up, that has basic save functionality, but I want it to be done right, and there are so many viewing features to do beforehand that those take priority. I thought of doing an SMS backup app, and I already have the code written to do it, but I think Google is getting strict about that stuff now.

I might check out the Jackcess library again and consider putting out a modern Android app that can load Microsoft Access databases in this framework. That might make sense as the first release of this current framework of code. Right now I am busy with my day job though, so that might be a bit.

Other than editing and saving, pull requests and patches are welcome. Please read the README with regards to that.

Thu, 02 Aug 2018

Developing an Android app - Wallpapers app - part 6

This is my 6th post in a series about the Wallpapers Android app I developed that is on Google Play. The first blog posts describe how I planned it, started developing it, released it, updated it, and if you want a view of the entire development process of an app, you might want to start reading from the beginning.

This blog post is about the last version I released, where I translate it from Java to Kotlin, and from MVP architecture to MVVM architecture with various Jetpack components. The app is a free one where people can browse through possible wallpapers for their phone background and/or lock screen, and then download and set them.

In my last post I talk about how Google favored Model-View-Presenter architecture in early 2017, and in the midst of my app writing, changed the roadmap at I/O 2017 to Kotlin and (then-beta) new Android architecture components. I ignored this then and kept plowing ahead with Java and MVP without these new components for this project. I did pick up the Android architecture components (Jetpack) and Kotlin on other projects though. I was busy doing these and other things, and my app's stability and functionality were pretty solid any how. It was a little over nine months before I dived back into the app code, rewriting it in Kotlin (and Jetpack).

The Google Android sample apps that use Kotlin and Jetpack components - the sunflower app, the Github app, and particularly Google's Reddit network paging app, were apps I studied as I learned Kotlin and Jetpack. I actually rewrote the networking paging app from the ground up, removing anything extraneous, until it was at under 1000 lines of Kotlin. I also saw how the various classes worked together as I built it.

I used this stripped down networking paging app I culled as the framework of the new Wallpapers app. The existing Java language, MVP architected Wallpapers code had components I sprinkled into this rewritten app which uses Kotlin and Jetpack. It was in June of this year I decided to rewrite the Wallpapers app in Kotlin, using modern Jetpack components like LiveData, ViewModel, Paging and so forth. I want to make the app better and keep it up to date, and I also want to hone my somewhat new Kotlin and Jetpack skills on a slightly more complicated challenge.

June 9, 2018

In Android Studio, create new Android Kotlin app on the basis of my stripped down version of Google's Reddit networking paging app. Create standard Android Kotlin skeleton. Then add ServiceLocator (no Dagger in this app yet), ViewModel, Repository. Then add Glide to load the images. Then add the RecyclerView and ViewHolders. Some of this stuff I had in the old app, which makes things easier.

June 10, 2018

Add a (Room) Dao. Add a (Retrofit) web API. Add more logic to the repository. Load the first url. It loads! Now I need to parse it. Now translate more network and database logic from the old Java MVP app to the new Kotlin MVVM app. I make good use of Android Studio's Java to Kotlin code converter, which does not always work (and even when it does, is not always exact). Work on the ViewHolder. Now load some images. They load! Oops, some of the filenames have UTF-8 and URL encoding quirks. Translate the old code that deals with that to Kotlin.
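The post does not spell out which encoding quirks came up, so the following is only a generic illustration of the kind of thing involved: a filename with a space and a non-ASCII character has to be percent-encoded as UTF-8 bytes before it can go into a URL. The filename and helper names are invented.

```python
from urllib.parse import quote, unquote


def encode_filename(filename: str) -> str:
    """Percent-encode a filename as UTF-8 so it is safe inside a URL path segment."""
    return quote(filename, safe="")


def decode_filename(encoded: str) -> str:
    """Reverse of encode_filename."""
    return unquote(encoded)


# Hypothetical wallpaper filename.
name = "Café au lait.jpg"
encoded = encode_filename(name)
print(encoded)
print(decode_filename(encoded) == name)
```

Encoding twice, or decoding with the wrong character set, is the usual source of broken image URLs in this situation.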

June 11, 2018

Now redo the frame. Cool, load three images in a row, just like the old app. Add the app icon. Since Android 7.1 round icons have come to the fore, I might have to think about redesigning my icon. OK, a grid of thumbnails is loading, just like the old app. On the old app I could select a thumbnail to see it in more detail, as well as find out more about it, as well as allow me to download it and, if I want to, set it as my wallpaper. So I start working on that detail page in this app. I start with loading the thumbnail from the grid in the new page with Glide.

June 19, 2018

OK now I have a detail page with an initial thumbnailed picture up top. I put in Retrofit calls to get the detail information, and some of the logic to send that state information to the UI.

June 20, 2018

I put in more logic to pull from Retrofit to the UI. I introduce data binding into Gradle, the Activity and the XML. Ah, my Dao SQL calls can be improved. Android really is getting full stack - I can use my server SQL skills locally in the Android app.

June 22, 2018

OK now dealing with asynchronous threads, LiveData etc. The database takes time to get the information, I have a LiveData object in the repository that posts the state information to the ViewModel though, so that the different parts of the app can know when the data is ready.

June 24, 2018

I make the links on the detail page clickable (to web pages). The Wallpaper class which the detail page uses has a number of String variables, and the transformations are a little kludgey, but it works. One of these variables is wallpaper categories, which were missing until now, so I start putting them in.

June 25, 2018

OK - so now we're adding the more complex attributes of the wallpaper. We did the easy ones early, categories was yesterday, now we're adding the wallpaper's licenses. OK cool. OK, now we move on to downloading the wallpapers. Works! OK, now we set the wallpaper as a background. Works (tentatively)!

June 27, 2018

OK, so the other app opens as three tabs, one selected at a time. So put that in. We have a grid Activity, so make it a Fragment like the other app. OK. So the first two tabs are similar, the third tab is a list of our category types for wallpapers (nature, flowers, cats, space, food etc.) So put that list in.

June 28, 2018

The old app had progressive thumbnail loading - when we go to the detail page we first load the small grid thumbnail, then we load a more detailed thumbnail over it. So we put that in. It seems simpler here, Glide probably improved.

July 1, 2018

We have been loading the recent tab, now add a real popular tab which loads the popular wallpapers. I allude to how I choose which wallpapers are popular in an early blog post, although it has been refined since. The method is to look through the logs, see which wallpapers have been downloaded by a unique IP, and then score them, with the number of days since each download feeding an exponential decay into the overall score. It works pretty well, I think it puts me over all the other wallpaper apps actually in that one regard. Perhaps I'll go into more detail in another blog post.
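A minimal Python sketch of that kind of exponential decay scoring. The record layout, the 30-day half-life and the rule that each IP/wallpaper pair counts once are assumptions for illustration, not details taken from the real script.

```python
from collections import defaultdict

HALF_LIFE_DAYS = 30.0  # assumed value, purely illustrative


def popularity_scores(downloads, today, half_life_days=HALF_LIFE_DAYS):
    """Score wallpapers from (ip, wallpaper_id, day_number) records.

    Each unique (ip, wallpaper_id) pair counts once, using its most
    recent download, and contributes 0.5 ** (age / half_life_days).
    """
    # Keep only the latest download day per unique IP/wallpaper pair.
    latest = {}
    for ip, wallpaper_id, day in downloads:
        key = (ip, wallpaper_id)
        if key not in latest or day > latest[key]:
            latest[key] = day

    # Sum the decayed contributions per wallpaper.
    scores = defaultdict(float)
    for (_ip, wallpaper_id), day in latest.items():
        age_in_days = today - day
        scores[wallpaper_id] += 0.5 ** (age_in_days / half_life_days)
    return dict(scores)


sample = [
    ("1.1.1.1", "a", 100),
    ("1.1.1.1", "a", 100),  # same IP and wallpaper, counted once
    ("2.2.2.2", "a", 70),   # 30 days old, worth half a fresh download
    ("3.3.3.3", "b", 100),
]
scores = popularity_scores(sample, today=100)
```

With a decay like this, a wallpaper that was downloaded heavily a long time ago gradually drops below one with fewer but fresher downloads.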

July 2, 2018

OK so now when you click on one of the list of categories, a category page actually loads. The Retrofit logic, Room, ViewModel and UI stuff is there now. Also start sending version and language information to the (test) server, as well as the Instance ID (which we do not initially load in the main thread!)

July 3, 2018

So now the paging library deals with loads. It is a little different from my old hand-tuned code. My old code did a small initial load, so that even on slow connections, something would appear on the screen, and subsequent JSON loads were larger. Also, I did a lot of pre-loading so that scrolling went smoother. One big difference is the Android UI knew how large the grid would be in the old app, and now it does not know until the last page is loaded. So that is a factor in slowing scrolls down.

The old Java code used Java TreeMap to sort the category list for different languages. I send a "java.util.TreeMap

I put the dev and production URLs for the REST API in a saner, central place. I improve the network error message (in the old app it was a dialog window). I upgrade various libraries.

July 6

Upgrade Kotlin 1.2.50 to 1.2.51.

July 7 - 27

OK, I had a list of to-do's, and most of them (except the simple ones to leave to the end, like bump app version number, turn on production URL, put in ads etc.) are done. Two tougher ones remain - making sure I poll the web API periodically, and keeping my place on the grid when I click on a detail and back into the grid (something other example apps like the Google Sunflower app do not do).

So for having a robust scheduled web poll time - I go down some blind alleys, like the way Google's Github app does it. It is not robust enough for me. I also run into all kinds of headaches, like Room deletions are not working for me. They do when I add onDelete CASCADE ForeignKey parameters to various Entities though.

I am storing the last web poll time in Room. Not sure if it is 100% necessary, and it may be overdoing things, but I'd rather know it was being polled than chance it not being polled. Any how, all seems OK but I do not fully understand this and should revisit it later.

July 28

The web poll code is done. Yay. Now I get to work on keeping my location in the grid.

July 29-31

Keep location in grid after clicking in detail. I do it essentially the old way I did it before, except the old way I did not do a scroll until the presenter notified the UI that the grid was populated, and now I have an observer in the UI which notifies me when the grid is populated. So it works, yay.

August 1, 2018

OK I started this about two months ago, spent about three weeks (when I had time) on web polling, and then three days on scrollToPosition in the new app. So I'm a little antsy to publish. I QA and QA things and they look OK. I make a release and send to another app, signing both the jar and the whole APK, as I want it to work with old and new devices. Things look OK so I send it up to internal testing on Google Play.

The results come back. A crash. I look. Some of the code I did an automatic Java to Kotlin translation of did not come out exactly right. I do a non-null assertion (!!) where I should not. I had a null check later in the old code, so it was working before. Any how I redo the code, QA, especially in what the changed code deals with, and upload again to Google Play internal testing. Google is still running it through its testing devices.

So we'll see how this goes. The app was fairly solid before in terms of stability. One problem users had sometimes in the old app was with the Environment.getExternalStoragePublicDirectory() call. One problem I had is I knew that call was failing but did not know why. I rolled my own network crash report system and discovered it was usually because the mkdirs() call on the object returned from that call was failing. Which I still have to figure out. Other than that, things were fairly solid.

Fri, 27 Jul 2018

Python Scikit-learn and MeanShift, for Android location app

I am writing a MoLo app (Mobile/Local) which might even become a MoLoSo app at some point (Mobile/Local/Social).

Any how, the way it works right now is it runs in the background, and if I am moving around, it sends my latitude and longitude off to my server. So I have a lot of Instance IDs and IP addresses, timestamps and latitude and longitudes on my server.

How to deal with taking those latitudes and longitudes and clustering them? Well I am sending the information to the database via a Python REST API script, so I start with that. I change the MariaDB/MySQL calls from insert to select, and pull out the latitudes and longitudes.

The data I have is all the latitude/longitude points I have been at over the past four months (although the app is not fully robust, and seems to get killed once in a while since an Oreo update, so not every latitude and longitude is covered). I don't know how many clusters I want, so I have the Python ML package scikit-learn do a MeanShift.

One thing I should point out is that in the current regular fast update interval for the app, I only send a location update if the location has changed beyond a limit (so if I am walking around a building, it will not be sending constant updates, but if I am driving in a car it will).
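The "only send if I moved far enough" rule can be sketched in Python as a distance check against the last point sent. The 50 meter threshold and the function names are hypothetical, and the real app does this on the Android side rather than in Python.

```python
import math

EARTH_RADIUS_M = 6371000.0
MIN_MOVE_M = 50.0  # hypothetical threshold, not the app's real limit


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def should_send(last_sent, current, min_move_m=MIN_MOVE_M):
    """Send the first fix, then only fixes beyond the distance limit."""
    if last_sent is None:
        return True
    return haversine_m(*last_sent, *current) > min_move_m
```

A filter like this is why a drive produces a dense trail of points while an afternoon inside one building produces almost none, which matters for how the clusters below come out.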

Scikit-learn's MeanShift clusters the locations into four categories. Running sequentially through the clusters, doing a predict, the first category starts with 2172 locations. Then 403 locations for category two. Then 925 locations for category three. Then another 410 locations for category two. Then 4490 locations for category one again. Then 403 locations for category four. Then 2541 locations for category one.
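The run-by-run summary above is a run-length count over the predicted labels in time order. A small sketch of that step follows. The label list here is made up, and in practice the labels would come from something like scikit-learn's `MeanShift().fit(points).predict(points)`.

```python
from itertools import groupby


def label_runs(labels):
    """Collapse consecutive equal labels into (label, run_length) pairs."""
    return [(label, sum(1 for _ in group)) for label, group in groupby(labels)]


# Made-up labels in timestamp order: home, a trip, home again.
example_labels = [0, 0, 0, 1, 1, 0, 0, 0, 0]
runs = label_runs(example_labels)
```

Reading the runs in order makes a one-off trip stand out as a short burst of a different label between two long stretches of the home cluster.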

The center of the first cluster is about half a mile west and a quarter mile south of where I live. So I guess I spend more time in Manhattan, Brooklyn and western Queens than in Bayside and Nassau.

The center of the second cluster was near Wilmington, Delaware. The center of the third cluster was Burtonsville, Maryland.

It's due to the aforementioned properties of the app (I only send a location update if the location has changed beyond a small distance limit) that I had 2172 locations from March 12th to April 2nd in one clustered area, and then on April 2nd - 1738 locations in two different clustered areas. On April 2nd I drove to and from my aunt's funeral in Maryland. That trip created two clusters - one in Wilmington for my drive there and back, and one in Maryland where I drove to the church, the graveyard and to the lunch afterward.

So then I have another 4490 location updates in cluster one, until those 403 updates in cluster four. The center of that cluster is Milford, Connecticut, and it revolves around a trip I made to my other aunt's house near New Haven, Connecticut from May 25th to May 26th. Then it is another 2541 updates back in cluster one.

So...I could exclude by location, but I could also exclude by date, which is easier. So I exclude those three days and do MeanShift clustering again. Now I get six clusters.
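Excluding by date is a one-line filter before the points go back into MeanShift. In this sketch the row layout (timestamp, latitude, longitude) is an assumption, and the year 2018 is inferred from the date of this post.

```python
from datetime import date, datetime

# The three travel days mentioned above; the year is an assumption.
EXCLUDED_DAYS = {date(2018, 4, 2), date(2018, 5, 25), date(2018, 5, 26)}


def drop_excluded(rows, excluded=EXCLUDED_DAYS):
    """Keep only rows whose timestamp does not fall on an excluded day."""
    return [row for row in rows if row[0].date() not in excluded]


rows = [
    (datetime(2018, 4, 1, 12, 0), 40.7, -73.9),
    (datetime(2018, 4, 2, 9, 30), 39.7, -75.5),
    (datetime(2018, 5, 26, 23, 59), 41.2, -73.0),
]
kept = drop_excluded(rows)
```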

Cluster one is centered about five blocks east, and slightly north of where I live. It has the bulk of entries. Cluster two is centered in east Midtown. Cluster three is centered near both the Woodside LIRR station and the 7 train junction at 74th Street. Cluster four is centered in Mineola, Long Island. Cluster five is centered south of Valley Stream, with 200 updates in three chunks. Cluster six is in Roosevelt, Long Island and only has one update.

MeanShift is good, but I may try other cluster types as well.

Sat, 23 Dec 2017

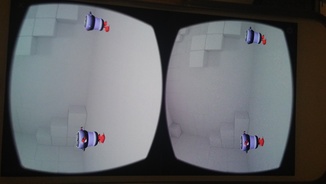

So on February 13th I bought a Pixel phone as well as a Daydream VR headset. I set up the Android Studio "Treasure Hunt" sample, and modified it slightly to change the controller behavior and other things. This was all rather new, and there was not a lot out for it. I went into the Daydream VR store and downloaded some free games (and one paid one) and saw almost all of them were made from Unity. I saw a lot was involved making VR from scratch in Android Studio, and that it was also relegated to just the Daydream VR headset, so I put it aside and worked on other things.

For most of this year I have only had an Ubuntu Linux laptop and desktop. I read several months ago that Unity had a Linux beta, but then read that it did not export scenes to Google Daydream VR. However, I have had a Mac notebook on loan since October, and I knew Mac could export to Google Daydream VR.

So on December 10th, I downloaded Unity to Linux and began looking at it. Unity has a tutorial called "roll a ball" where you make a game that rolls a ball around, picking up spinning cubes. While making the game, you're learning about the various aspects of Unity, writing short C# scripts and so forth. I finished that, and then had a nice little game. One nice part is it was exportable to a number of OS's - Android, iOS, Linux, Mac etc. I played the game on my Linux laptop, and then played it on my Android.

Then I looked at the Google Daydream VR sample on Unity Linux. It downloaded, and I could edit the scene and preview it on Linux. Then I tried to export it to Android VR. No go. Well, Unity had warned me before I downloaded it, but I gave it a try.

So I pulled out the Macbook I have on loan, downloaded Unity, downloaded the Google VR scene, and sent it to my Pixel. I put my Daydream VR headset on and, bam, I am in the scene I just compiled.

I do some minor modifications, and they pop up in the scene. I program Unity, put the headset on, am in the world I just made, want to make a change, pop the headset off, back at the keyboard, put the headset on again and am in the changed scene. Very cool.

At the local Android Developer Meetups is a fellow named Dario who works for HTC. He has been working with VR a lot. He thinks the interesting thing will be the building you can do within VR. One example I have seen of this is Medium, where you are molding a form together with your controller. In school I learned that one definition of an embedded system was a system that could not program itself. If you could change the world you were in from within using Daydream, Oculus, Vive etc., the scene would not be embedded.

In the XScreenSaver source code is a DXF file to build a robot, I popped it into Unity. It was way too big for the base Unity Daydream sample app scene. So I scaled it down a bit. Better.

But it was all one color. So I looked at the winduprobot.c to see what was being sent to glColor3f for various robot parts. I dropped them in as materials and now the robot was colored properly.

But the DXF was only half a robot. So I looked in winduprobot.c again and mirrored or otherwise convoluted various parts so that the body inside, body outside, leg, and arm-part would be mirrored on both sides.

So that is where I am now with it.

It is pretty cool to be able to drop 3d models into the world, write little C# (or Javascript) programs for the world and so forth and have it all pop up, and to be in that world.

In the future I might look into Godot Engine which is getting some AR/VR support, or look back on the Android Studio VR modules, or into other things. Unity is a good, easy base to survey these things from though.

Sat, 19 Aug 2017

Developing an Android app - Wallpapers app - part 5

This is my 5th post in a series about the Wallpapers Android app I developed that is on Google Play. The first blog post describes how I started developing it, this is about the last few versions I released.

In early 2017, Google favored a certain type of Model-View-Presenter architecture for Android apps. Google promoted this as the way Android apps should be written.

As my last blog post notes, on April 23rd, 2017, I began refactoring the entire app to fit more into this architecture that Google was promoting. In the three weeks after April 23rd, I did a large amount of work rewriting the app in this manner.

Then on May 17th, Google I/O happened, and they announced a whole new way of architecting apps that sort of junked my last three weeks of heavy work somewhat. C'est la vie! Welcome to Android development. Also, as typical for Google, it was announced as a beta, so the production readiness of it was questionable. After talking to people and reading thoughts from Android experts, I decided to press on with refactoring to this now deprecated architecture, with thoughts of perhaps refactoring it again to the new architecture model at some point in the future.

So my previous blog post focuses on the release of this majorly refactored code on June 13, 2017. This blog post focuses on the post-release of that. First, fixing errors I saw pop up on the release of that code. Also, other improvements I have made since that release.

June 15, 2017

I update the Google services JSON for Firebase (and Admob), and upgrade Firebase to v.11.0.1. On the Admob backend, ads for the main page are distinguished from ads on the category pages, so I make the distinction explicit in the ads on the app as well. I display a Toast when a download completes successfully. I also deal with when an object comes in as null in places where I am not 100% sure why the object would ever come in as null, I have to check into that more.

June 16, 2017

Release app, release 2.7.3. I do partial releases to 1000 users at a time of the new code.

June 19, 2017

When the Android client connects to the JSON API, each client sends a unique InstanceID. The main purpose of doing this is to track down errors, if people are having problems with the app, we want to have as much information as possible in order to try to fix the problem. However, I am seeing ANR (Application Not Responding) errors, as some Android devices are freezing up while calling the Google Play Services code to get an InstanceID. So I put the (not very essential) call in an AsyncTask so that that freeze-up does not happen.

There was also a problem of network requests going out, the view/presenter being reset and sending out a duplicate network request, then the old request coming back, and then the new duplicate one. The simplest thing for me to do is to discard the old ACK, so that is what I do. I do a release and start rolling out this new version.

June 21, 2017

More nullness to deal with. Retrofit Response bodies are coming back null. Have not been able to reproduce this in QA yet. I rewrite the code to display the "network failed" dialog when this happens, and have to do more QA to see how to reproduce this problem which is happening in the field.

It was not scrolling all the way to the end of the wallpaper grid in some cases, so I modified the code so that it would.

In the previous blog post, I mention one thing I punted on with the big June 13th release was ranged notifies. When a JSON would return new wallpapers, I notified and refreshed the entire adapter, which made the images reload (which made the screen blink) every time a new JSON came back with new wallpapers. As June 13th approached I was getting antsy with how long the refactor and QA had taken and decided this annoying blinking was something I could live with and deal with later. As the release went out, I take a look at it now. I see that it is not that difficult to send a notifyItemRangeChanged to the adapter, so I do that. The reloading and blinking is now gone. Yay.

I also make sure some assertions are true before loading more JSONs for the recent and popular wallpaper grids.

June 22-24, 2017

I am running into one of those hairy Android problems. There is an older and newer method of sending images off in an Intent to be set as wallpapers. The problem is it is not exactly clear when the old method should be used, and when the new method should be used. For the app being sent to, which method to use can depend on not only the app version, but the Android version, and other factors. Also, this new method has problems to be dealt with as well - the old version handles things like JPG's which have filenames which end with a capitalized JPG, but the new method does not (without some rearranging any how). I don't really fix anything, but Google+ is now excluded from setting wallpapers as it only works with the new method, which I have yet to implement (outside of test functions).

June 26, 2017

Some of my competitors have a nice feature graphic for their Google Play store listing. Mine is not so great. So I put together a nice 1024x500 feature graphic. What I do is find 14 nice wallpapers which go together nicely. Then I make 146x250 thumbnails of them, which are a ratio close to that of a typical Android phone, with the exception of the 4 wallpapers on the left and right edges, which are all 147x250.
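The sizes work out if the 14 thumbnails are laid out as two rows of seven, which is an assumption here: the text gives only the counts and sizes, not the layout. A quick Python check of that arithmetic:

```python
CANVAS_W, CANVAS_H = 1024, 500
ROWS, PER_ROW = 2, 7  # assumed layout: 14 thumbnails in two rows of seven
THUMB_H = 250


def row_widths(per_row=PER_ROW, inner_w=146, edge_w=147):
    """Widths of one row: a wider thumbnail at each edge, narrower inside."""
    return [edge_w] + [inner_w] * (per_row - 2) + [edge_w]


widths = row_widths()
total_width = sum(widths)       # 147 + 5 * 146 + 147
total_height = ROWS * THUMB_H   # two rows of 250px
edge_count = ROWS * 2           # one wider thumbnail at each end of each row
```

Five 146px thumbnails plus two 147px edge thumbnails fill the 1024px width exactly, and two rows give the 4 edge thumbnails mentioned above.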

I never did a store listing experiment before so I do one for the new graphic. I start by doing a global experiment, but the global experiment is constricted. So I do it by language - both English and French. I run the experiment for 11 days. There are a few hundred downloads but no big statistical difference is seen. So I end the experiments and serve everyone the new graphic - it doesn't seem to have harmed anything anyway. Subsequent looks at those statistics yield very little as well, it had no major effect on download conversion in either direction.

June 30, 2017

Deal with Retrofit Response being null for detail responses, just as I had for Retrofit Response being null for grid responses on June 21st. As a preventative measure, I have the category page deal with null Retrofit responses as well, although I have not yet seen them in the wild.

July 7, 2017

Even though the images on the category page have been shrunk to 200px, they still cause OutOfMemory errors on some devices. So I push handling of the image loading to Glide.

July 8, 2017

Upgrade Firebase etc. to 11.0.2

July 9, 2017

People in the wild are crashing on a null view object in DetailFragment. I put in a kludge to deal with this, but the real problem is that object should not even exist in the first place, and DetailFragment has become too spaghetti code like as it has continually accreted code to try to deal with all the various tasks it has to do (permissions, load two thumbnails, load JSON and description, download and set wallpapers). A few weeks later I will rewrite this class and make it cleaner.

July 10, 2017

Production release of new code.

July 13, 2017

Usually I am testing this code on wifi. When I test it on a cell connection, my connection is usually good. So I don't have a lot of QA from less robust areas.

In New York City there is a local Android developer meetup. I go to it and show someone my app. The cell connection is not robust though, and embarrassingly, my app has problems as I show the app to someone. The problems go away when I go home to my wifi and good coverage area.

I go to a $150-a-month co-working space I have access to, where the cell coverage is not always robust. I begin to see the problem again. When the phone is on wifi and I ask the connectivity manager in the fragment's onResume whether the network is connected, it immediately says yes. However, when the question is asked while the phone is on a spotty cell connection, the answer within the first 20 milliseconds is "no". Usually less than 20 milliseconds in, a system broadcast comes in that the network is connected.

So now in onResume, I do a network test, wait 100 milliseconds (I tack on 80 milliseconds), then do a second network test. I only listen to the results of the second test. This seems to solve the problem, I get far fewer false "network disconnected" messages. Another Android programmer told me I must be imagining all of this, but this is what happened for me. Perhaps his phone never has this problem.

July 16, 2017

When I show the network is disconnected dialog, I have been assuming people were always clicking the OK button. I put in code to deal with every manner in which they might dismiss this dialog.

July 18, 2017

I add the timed network connection test to the category grid.

July 19-22, 2017

I write some JUnit and Espresso tests for the app. From Android development on Eclipse to now there have been many changes, but I see that it is very easy to write tests now. It just takes a few minutes to add a JUnit test and an Espresso test and then run both.

July 25, 2017

I QA the app on an ICS (v. 4.0) tablet. Oops, the permissions for downloading are not correct. Manifest.permission.READ_EXTERNAL_STORAGE was not introduced until API 16. I redo permissions so that the small number of devices that still come in that are v4.0 API 14 and API 15 work.

Also, a few people here and there are having IllegalStateException errors when doing a DownloadManager.Request on the setDestinationInExternalPublicDir method. There are three possible IllegalStateException's they may be having, and I don't know which one they are generally having. So I set up a method to test for this and upload data to my bug reporting server if the problem is seen. I will be looking into this more as reports come in (although so far, people have been having two of the three possible errors, pointing to different causes).

People are using new licenses on Wikimedia Commons so I add blurbs about those new licenses to the app. There are enough wallpapers in the Sky category (60) to put it into the app, so I do so and put a relevant drawable in for it as well.

Also, in unexpected behavior news, some people click the download button 10 times in a row and download the wallpaper 10 times. So now I have it download on the first click and ignore subsequent clicks.

July 26-30, 2017

More JUnit and Espresso tests.

August 1-12, 2017

Dealing with that problem with people clicking download 10 times in a row on the Detail page, I want to add more state to the Detail Fragment, but I take a look at it and see how much spaghetti code it has. Dealing with loading the existing small thumbnail, and then a larger thumbnail, dealing with grabbing and displaying meta information, dealing with permissions, and downloading and setting - the code has accreted and is now fairly convoluted. I do a JavaDoc generation of the project and look at the detail code in the JavaDoc and it is not pretty. I also manually put together a Graphviz of the DetailFragment method call graph and it is convoluted and confusing.

Instead of accreting even more functionality to an already convoluted class with a lot of spaghetti code, I decide to refactor the class. I start from scratch and cut and paste the old code as needed.

One of the first things - as I mentioned on July 9th, I was keeping the view object around in the Fragment, which was not a good idea. So I dump that and now just getView() when I need the Fragment's View.

I also have a variety of Strings and such scattered about with information on the wallpaper images and the wallpaper metadata. I consolidate that into two classes - Wallpaper and WallpaperMetadata.

The code had just accreted and had kludges and was calling things unnecessarily. I streamline to a sensible directed graph. When the fragment resumes, I load the small thumbnail, and have the larger thumbnail load after that. I also have another directed graph where a JSON of metadata is pulled and then displayed on the page. The third directed graph is based on the download and set buttons. If pressed, I check for the proper permissions, and based on that, download, and if requested, set the wallpaper.

This is better than the previous code, which had unneeded dependencies in the image load and the metadata load, and other unneeded dependencies. Everything is now off in its own self-contained silo of functionality.

The network failed dialog is still popping up when it should not sometimes (after onSaveInstanceState being called for instance), so we deal with that as well.

August 13, 2017

Someone with a small, low density phone gave the app a 3 rating. I make an emulator for a phone of this type and test it out. I see the word categories on the tab appears in a font which is too large, so I decrease the font size on small, low density devices.

August 15-17, 2017

More Detail fragment refactoring. Rewrite JUnit tests for the Detail presenter, as I modified the Detail presenter as well.

On the server side, since I'm a full stack programmer [at least according to the definition I read someone give online somewhere of what a full-stack programmer was :) ], my Python script which determines which wallpapers are popular was running slow because it was taking too long to get rid of duplicates. One reason dumping duplicate IP/wallpaper downloads is important is as I am using Android DownloadManager now, downloads now are more broken up and - duplicated. I solve this problem by creating a unique set, and seeing if unique data structures are in that set or not. Any how, now it takes five seconds to process the 145,000 downloads I have, whereas beforehand it took a few minutes. I had identified the problem of uniqueness beforehand, but surprisingly it took me less than an hour to solve the problem.
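A minimal Python sketch of that set-based duplicate removal. The (IP, wallpaper ID) tuple layout and the function name are assumptions, and the real script's data structures may differ.

```python
def unique_downloads(records):
    """Return the first occurrence of each (ip, wallpaper_id) pair, in order."""
    seen = set()
    unique = []
    for ip, wallpaper_id in records:
        key = (ip, wallpaper_id)
        # Set membership is a hash lookup, so this stays fast even with
        # many records, unlike scanning a list for every record.
        if key not in seen:
            seen.add(key)
            unique.append(key)
    return unique


log = [
    ("1.1.1.1", 42),
    ("1.1.1.1", 42),  # same download broken into a second request
    ("2.2.2.2", 42),
    ("1.1.1.1", 7),
]
deduped = unique_downloads(log)
```

Each membership test against a set takes roughly constant time on average, so the whole pass grows linearly with the number of log records.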

Back on the Android client side, and looking at the small, low density emulator, I see that some foreign languages use fonts which are too large for the download and set wallpaper buttons. So I shrink the font sizes accordingly.

I sent out for translations of the app, its Google Play blurb, its ads, as well as the descriptions of a few of the more popular wallpapers. The app is already in English, Spanish, German and French, I am now doing Czech, Russian, Polish, Portuguese, Korean, Italian and Dutch. Those seven languages were determined from two factors - one, the number of images in Wikimedia Commons that were in those languages, and two, the amount of ad revenue which I could generate in those countries. If the cost of a translation and a small ad campaign could be recouped within a certain time period, then I opted to choose that language. There aren't many Korean language images in Wikimedia Commons, but there is so much ad revenue in Korea that I paid the $30 to translate it any how. As for an ad campaign there, and whether I'll have enough images in that language to fit the bill, I'll deal with that when it comes up. My app's multi-language capabilities are already superior to those of some of the leading wallpaper apps.

There is not a lot to do now. The refactored version has been out for over two months and all the major bugs have been fixed, except for a few infrequent and hard to track down ones. I'll just try to keep adding three or so new wallpapers every day, as I have been doing. This will give more of a selection, and fill out the categories more. Once I reach some threshold with the wallpapers, I will put in search functionality so that people can search for the wallpaper they are looking for. That would be the next big change for the app.

So, now that necessary upkeep on this app has dwindled (hopefully) to a few hours a week for the foreseeable future, I'll start pulling out some of the other irons I have on the fire...

Tue, 13 Jun 2017

Developing an Android app - Wallpapers app - part 4

This is my 4th post in a series about the Wallpapers Android app I developed that is on Google Play. The first blog post describes how I started developing it, this is about the last few versions I released.

So I started working on this app one year and three months ago. I released version 1 one year and one month ago with 335 wallpapers. I am in the middle of a staged rollout of my most recent release, which was a fairly significant one, as I have been working on the latest release for three months without any intermediate releases since then.

The main thing I did was make the app more explicitly in line with what Google suggested. Google suggested that Android apps be built with certain architecture types. Two of the popular architecture models they suggested were MVP and MVVM. As the MVP (Model-View-Presenter) architecture was the simplest architecture they suggested, and fit with what I was doing, I went with that.

Of course, right as I was finishing up with all the work I had done following Google's then-current best practice suggestions, Google I/O happened and Google announced a whole new official architecture framework. So my app's architecture was, in a sense, obsolete before it was released. I considered dropping all my recent work and using the bleeding edge new official architecture suggestions from Google. My thoughts though were that it was yet untried, and other Android programmers felt the same.

In addition to a more explicit Google-blessed architectural model, I decided to make the app more in line with what most Android shops were doing. Although the Android Universal Image Loader library has worked well for me, it has not been updated at all in eighteen months, a long time in an Android environment where new Android versions are coming out regularly. I switched to the Glide library, as it is popular and people like it. I could just as easily have picked other popular Android image loading libraries such as Fresco or Picasso, but Glide suited my needs better.

I also changed other things. GridView went out, RecyclerView came in. I used Retrofit for JSON loading, and GSON to convert the JSON into objects.

A few things prompted these changes. One was that my method of dealing with my main data structures was not so great. Particularly in giving access to the data model all around the app. I had known that my existing methodology was problematic - but it did work.

However more of the newest Android devices (Nougat) were coming online. With my number of wallpapers growing, as well as Nougat's new constraints, I began seeing TransactionTooLargeException errors when people scrolled down to the bottom of what were now over 1300 wallpapers.

Another reason for the major refactor is just that I had been working with MVP architecture, RecyclerView etc. in other apps and wanted to bring all of that good stuff into this app.

Any how, here is my timeline of work. As I said in previous blog posts, this is to give people some idea of what goes into programming an Android app.

From December 24, 2016 to March 30, 2017, I am just doing regular updates. From April 23rd, 2017 to now, I am redoing the app in the MVP architecture, as well as making other large changes.

December 24, 2016

These apps are fairly dependent on network connectivity. If the network is not connected, I pop up a dialog fragment. But it pops up while the activity is finishing, which it should not do. So I patch that.

January 13-16, 2017

The images I have on my categories page are larger in file size than they need to be. I shrink them down to 200px each. Also, I have brought in more wallpapers over the past months, and choose better examples than existing to illustrate each category.

March 15, 2017

My big problem on the first release was that when I went out to test it, I realized it did not work on Marshmallow phones due to Marshmallow's new permissions model. I had done a kludge fix for that ten months before. In February 2017 I bought a Pixel phone running Nougat. While using my app on it and doing some informal QA, I notice there is a race condition in the Marshmallow permissions code, so that it does not always take effect. So I patch that. This is why it's good to have access to a lot of devices for Android. I upload the new version with this fix to Play, which is my last app update on Play for three months (but not my last update to the app, as I am putting about three new wallpapers a day online behind the API accessible to the app).

March 30, 2017

The aforementioned network-disconnected dialog fragment is being activated while the onSaveInstanceState method is running, which should not happen. So I disable that as well and patch it. As it happens rarely, I don't upload the new code to Play. One reason I don't release the fix is that I didn't anticipate that I would still be working on the next release all the way into June, so I thought the fix would go out earlier. It is not as major as the Marshmallow fix anyhow.

April 15, 2017

I go down a blind alley. I try to do a kludge to fix the TransactionTooLargeException that Nougat devices are seeing. But it is not possible - some work will be needed. And since some work is needed, I might as well do it right, and do as much work as is needed.

April 23, 2017

This is the start of work that will not be published on Play until June 12th. I decide to model the app on state of the art Android architecture for Model-View-Presenter.

The sample app for it is on GitHub. The main documentation page has a paragraph which is very confusing, until I realize that it contains a typo, which I send a pull request to fix. This is not an encouraging start.

May 2, 2017

GridView out, RecyclerView in. Wallpapers are now over 1000, so we need to start recycling views better if users want to scroll down into infinity.

Also, Android Universal Image Loader always served me well, but it has not been updated for eighteen months, and image libraries like Picasso, Fresco and Glide are what the majority of shops are using now. I choose Glide, which has been a suitable choice so far.

May 3, 2017

I start working on the Presenter part of the Model-View-Presenter. I get how this works - the View Fragment and the Presenter each implement an interface defined in a shared contract. This way, transactions between the View and the Presenter are made very clear (and testable).
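To make the contract idea concrete, here is a minimal sketch in plain Java with no Android dependencies. All of the names (WallpaperContract, WallpaperPresenter, WallpaperSource, RecordingView) are hypothetical and are not taken from the app; in the real app a Fragment would implement the View interface, and the source would be the Model.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical contract: one place that lists every call between View and Presenter.
interface WallpaperContract {
    interface View {
        void showWallpapers(List<String> titles);
        void showNetworkError();
    }

    interface Presenter {
        void loadWallpapers();
    }
}

// Hypothetical data source standing in for the Model.
interface WallpaperSource {
    List<String> fetchTitles() throws Exception;
}

// The Presenter only knows the View interface, never a concrete Fragment class.
class WallpaperPresenter implements WallpaperContract.Presenter {
    private final WallpaperContract.View view;
    private final WallpaperSource source;

    WallpaperPresenter(WallpaperContract.View view, WallpaperSource source) {
        this.view = view;
        this.source = source;
    }

    @Override
    public void loadWallpapers() {
        try {
            view.showWallpapers(source.fetchTitles());
        } catch (Exception e) {
            // Any failure in the source is reported through the contract.
            view.showNetworkError();
        }
    }
}

// A fake View that records what the Presenter asked it to do.
class RecordingView implements WallpaperContract.View {
    final List<String> shown = new ArrayList<>();
    boolean errorShown = false;

    @Override
    public void showWallpapers(List<String> titles) {
        shown.addAll(titles);
    }

    @Override
    public void showNetworkError() {
        errorShown = true;
    }
}
```

Because the Presenter talks only to the interface, it can be exercised with RecordingView on a plain JVM, without an emulator or device.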

May 6, 2017

I use Retrofit to grab the JSON, and GSON to turn the JSON into POJOs. Retrofit has a ready-made GSON converter. This all makes the code cleaner.

May 13, 2017

I fiddle with Glide's disk caching strategy, so that thumbnail images will tend to only have to be downloaded from the server once.

May 18, 2017

Glide has lots of animations, plus the RecyclerView blinks when the data set is changed. I work to minimize this. This is still not totally done, as I have not taken advantage of ranged data notifications to the adapter yet.

June 3, 2017

Trouble with FragmentPagerAdapter. Sometimes a new Fragment is created for an existing tab, while the old one comes back to life as well. I start dealing with this. I still don't feel it is totally dealt with, although I cannot see any problems it is causing now. I try lots of things with retained fragments, FragmentStatePagerAdapter etc.

June 7, 2017

The code from April 23rd to June 3rd was getting a little convoluted, so this refactoring gets a refactoring. I try to take out all the little kludges to get things working and streamline things sensibly.

June 9, 2017

A real breakthrough. The main data structures are resident in the Model repository. When the app starts up, I pass the View's adapters references to the data structures they need, which stay in the Model repository. That is the first and last time those references are handed over - on subsequent updates, all the actual work is done in the Model repository, and the current View adapter is just sent calls that do a notifyDataSetChanged. Very clean (cleaner yet would be ranged notify to the adapter).
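A rough sketch of that arrangement in plain Java, under some assumptions: WallpaperRepository and GridAdapter are hypothetical names, GridAdapter is only a stand-in for a RecyclerView adapter, and the listener wiring is one possible way to send the notify call, not necessarily how the app does it.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical Model repository: the single owner of the wallpaper list.
class WallpaperRepository {
    private final List<String> wallpapers = new ArrayList<>();
    private Runnable onChanged = () -> {};

    // Handed to the adapter once at startup: a read-only, live view of the list.
    List<String> wallpapers() {
        return Collections.unmodifiableList(wallpapers);
    }

    void setOnChanged(Runnable listener) {
        onChanged = listener;
    }

    // All mutation happens here; the View side is only told that something changed.
    void addAll(List<String> fresh) {
        wallpapers.addAll(fresh);
        onChanged.run();
    }
}

// Stand-in for a RecyclerView adapter; it keeps the reference and never copies the data.
class GridAdapter {
    private final List<String> items;
    int refreshCount = 0;

    GridAdapter(List<String> items) {
        this.items = items;
    }

    int getItemCount() {
        return items.size();
    }

    // In a real adapter this would trigger a rebind of the visible items.
    void notifyDataSetChanged() {
        refreshCount++;
    }
}
```

The adapter sees new items as soon as the repository adds them, because both sides share one list; the notify call only tells the grid to redraw.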

June 12, 2017

The todo list is getting shorter and shorter. I decide to punt on ranged notifies, even though it causes the grid to blink on data notifies, particularly on my oldest Android device.
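For reference, a ranged notify for the append-only case could look roughly like the sketch below. RecyclerView.Adapter provides notifyItemRangeInserted(positionStart, itemCount); the plain-Java code here only computes those two arguments, and the names RangedAppend and Range are hypothetical.

```java
import java.util.List;

class RangedAppend {
    // The two values that notifyItemRangeInserted expects.
    static final class Range {
        final int positionStart;
        final int itemCount;

        Range(int positionStart, int itemCount) {
            this.positionStart = positionStart;
            this.itemCount = itemCount;
        }
    }

    // Appends the new items and reports which positions were added,
    // so only those grid cells need to be bound instead of the whole grid.
    static Range append(List<String> current, List<String> fresh) {
        int start = current.size();
        current.addAll(fresh);
        return new Range(start, fresh.size());
    }
}
```

In an adapter, the result would be passed on as notifyItemRangeInserted(range.positionStart, range.itemCount) in place of notifyDataSetChanged, which is what avoids redrawing cells that did not change.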

I want the app to open, to do a small JSON grab of the most recent wallpapers, and for those images to be put in Glide and loaded. I want the user to quickly see something. That is the priority, everything else follows. So the first JSON load does not send an app InstanceID to the server. Previously the order had been first JSON pull -> load images -> load InstanceID -> do second JSON pull. Now I do the first JSON pull, and kick off a Runnable to send the InstanceID to the Model repository. Retrofit grabs JSON without InstanceIDs until the InstanceID has loaded. So the user is not inconvenienced. It works out well. The app is architected well enough, and with clean enough code, that these little extras don't really affect things. Timely InstanceIDs are nice to have, but not critical.
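The ordering can be sketched in plain Java as follows. This is an illustration under assumptions, not the app's code: WallpaperRequests is a hypothetical class, the query strings are invented (the real API's parameters are not shown here), and CompletableFuture stands in for the Runnable.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Hypothetical request builder living in the Model repository.
class WallpaperRequests {
    // Null until the background lookup finishes.
    private final AtomicReference<String> instanceId = new AtomicReference<>();

    // Kick off the slow ID lookup without blocking the first JSON pull.
    CompletableFuture<Void> loadInstanceIdAsync(Supplier<String> slowLookup) {
        return CompletableFuture.supplyAsync(slowLookup).thenAccept(instanceId::set);
    }

    // Invented query format: the ID is attached only once it is available.
    String recentQuery() {
        String id = instanceId.get();
        return id == null
                ? "recent?limit=20"
                : "recent?limit=20&instance_id=" + id;
    }
}
```

The first call to recentQuery() can go out immediately; later calls pick up the ID whenever it arrives, so nothing the user sees waits on it.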

Why do I send InstanceIDs to the server? Because it helps with bug tracking. Some users are having a problem, but tracking by IP does not cut it as their IPs change a lot. Even tracking by device does not cut it as some devices are fairly common. If I get a low rating on Play on a certain day, I look through the server logs for that device type, country etc. Two people who gave me a one-star rating loaded the JSONs, but no wallpapers, which helped me track down a bug.

So we're coming into the home stretch. I do a production build. Oops. Guava and Firebase libraries conflict. That's simple enough, I don't have much Guava code in the app. I rip the small amount of Guava code I have out.

I QA on my devices. I should probably do more QA, but it's been three months and I am antsy, and this can go on forever. I thought I was releasing a few weeks ago, but QAing kept catching problems. So I release to alpha, and then beta. One of my beta testers says it is all good. The Google Play developer console automatic tests go through fine. So I release to 25% of my users. Later on, I go out. I check the app on a public wifi network which is flaky. Oops, my detail page is messed up. The detail image appears, then disappears. Sometimes it is replaced by a better image a few seconds later, sometimes it is not replaced at all.

June 13, 2017

I fix the error. The higher resolution thumbnail is loaded to a file by Glide, and when that is all copacetic, it refreshes the existing lower resolution (RecyclerView grid) thumbnail, with the existing ImageView's Drawable serving as Glide's placeholder. This seems to work. I QA it for a bit and then release - to 50% of the app users.

There is still more QA I want to do. My main thing right now is that the app is functioning properly. I am most concerned with how the logic is dealing with network latency and flaky networks, as well as other problems that might crop up. Once I feel the app is mostly stable, I can concentrate on other enhancements. Of course, a regular addition of new wallpapers will go alongside this.

It's been a year and three months, and I haven't really promoted the app heavily. It has over 3500 active users, and a 4.3 rating, but I am concerned with the users who give it ratings from 1 to 3. I am concerned with bugs like TransactionTooLargeException. I am concerned with users who have more latency than I deal with (I do some things in QA to test this, but can do more). On top of these stability questions, the app only has 1371 wallpapers, whereas the main competitors have many more. If the app is stable, I can concentrate primarily on adding more wallpapers. After enough wallpapers are added, it would make sense to put in search functionality, which is the main emergent feature which the app could use.

So I will see how this update fares, perhaps do some more small fixes, and if things are going well, may start ramping up the marketing budget some. I previously was targeting English speaking countries and Spanish speaking countries. Currently I am targeting French speaking areas, and will soon be switching to primarily targeting German speaking areas. If everything is stable, and a few new fixes go in, I may ramp up marketing efforts, even if it is just to see how users respond to the app.

I should mention in closing that in April, another effort went into the app framework. I paid someone else to pick three wallpapers a day throughout April. I also paid a Python programmer to speed up the process I had to thumbnail images I had selected. Both people did a good job, and I may work with both again.

Wed, 28 Dec 2016

So having tried out the HTC Vive two weeks ago, I decided to go to Best Buy and give the Oculus Rift a try.

The Microsoft Store had more of an area set out for the Gear demo, the Rift area was smaller and not partitioned off. The demo guy worked for neither Best Buy nor Facebook/Oculus, but for a third party - but was more connected to Facebook/Oculus than Best Buy.

The setup had the Rift, a sensor, Rift headphones, and the new Oculus Touch controls. I put on the headset and then the touch controls. Like the Vive, you can see your virtual hands in front of you. You begin in something like a hotel lobby which is a waiting area of sorts. Then you're put on the edge of a skyscraper, and can look over the edge. I definitely had some visceral feeling of vertigo doing that. You're also put in a museum with a rampaging dinosaur, which looked real enough. You also get to watch two little towns operate. You also meet an alien. I believe this is the "dreamdeck" demo, although I don't recall seeing robots.

Then you're in an empty VR room and you learn how to use the touch controllers. Then you can choose what app to use - I chose Medium, a sculpting app. It's cool: you choose if you're left or right handed, and then the left hand does things like undo the last thing you did with your right hand. You get to sculpt a 3D tree. Your right hand keeps transforming from one tool to another depending on the job - first you place your tree upright, then add branches, then add more bulk to the trunk, then sculpt down the branches, then add leaves etc.

In my experience, the Vive felt more like 3D; the Rift felt a little more like I was looking at two screens. Although as I adjusted the headset it felt less like that - I'm not sure if that was the Rift or me just not tightening it properly.

One nice thing about the Vive demo was some of it was a little less guided - I could move around and manipulate what I wanted. The Oculus demo was guided each step.

Still it was pretty awesome. This is just the first generation; they'll get better and cheaper as time goes on. VR is obviously already here for early adopters. With killer apps and cheaper and better hardware, it will take over the video game market.

Thu, 15 Dec 2016

So today I went down to the Roosevelt Field shopping mall. I saw Microsoft had a store there, and often I would just walk by, but I decided to see what they had there.

Among the various devices were boxes with Oculus Rifts and HTC Vives in them. They even had the HTC Vive set up for a demo. I have of course been hearing a lot about VR since the Oculus Rift Kickstarter kicked off in 2012 (actually I've been hearing about it before that even). I've never tried the Vive or Rift though. Actually the Rift's hand controllers, Oculus Touch, just came out last week, so I'm not all that late in this.

It was quite amazing. There have been a number of times in my life that I have seen a new piece of technology - a PC, a modem, a Unix box on the Internet, a web browser - and it was immediately obvious how impactful this technology would be on the world. VR in the Vive was one of those experiences. Seeing it you can foresee the massive changes this new piece of technology will engender.

One thing a lot of people who have seen this have said is you have to see it to understand. You can explain it to people - but people won't really have an understanding of it until they use it. Because it is so visceral. It definitely has the "presence" within the immersion that people talk about.

I didn't realize how interactive it was. I walked around, I was under the ocean looking at fish, whales and a sunken ship, I picked up objects, I picked up mallets and played Mary Had A Little Lamb on a xylophone in a wizard's workshop, which then played over the store's speakers.

When I took the headset off after a few minutes I experienced what some have discussed. It was slightly disorienting. My central nervous system said - how did you get from the bottom of the ocean to wandering around this mall so quickly? It's a signal of just how this relatively inexpensive and relatively portable system really has finally got immersion presence right.

Sat, 03 Dec 2016

My Wallpapers Android app, and the last 10%

So "version 2" of my Wallpapers app for Android went out on June 30th. I then looked to make improvements. I put the category name on top of the category page. I adjusted text size according to the screen's DPI. I removed image margins for image details. I also did some UTF-8 fixes, as I wanted to start moving into the international, non-English-speaking market. These changes done, I released "version 3" (technically 7) on July 23rd.

Continuing on non-English languages, I then did many more Android side and server side changes to handle other languages. I paid to have the app translated into Spanish, French and German. Many of the pictures already had multi-language blurbs and/or tags. I upgraded the Gradle version. I fixed the button and button text size. I added more licenses for the pictures being used. I added functionality so categories could survive screen rotation like non-category grids. Google seemed to be sidelining Google Analytics and pushing Firebase, so I tore Analytics out and put some Firebase in. And then - release of "version 4" (8, technically) on September 16th.

I had done the foreign languages because it seemed as if it was time to expand beyond English-speaking countries. People used the app, rated it well, came back to it to check for new wallpapers. I thought I had done enough QA on it. So three language translations were paid for, and on push-out of "version 4", I began doing heavier paid promotion of the app.