About

Android, Linux, FLOSS etc.

Code

My code

Subscribe

Subscribe to a syndicated RSS feed of my blog.

Thu, 16 Mar 2023

I have been using GPT-3, and GPT-4 just came out. Finding this interesting, I downloaded LLaMA. I have LLaMA on my desktop. There is a gradio, Automatic1111-like text generation web UI that lets large language models be targets. Normal LLaMA does not work for my desktop's Nvidia RTX 3060 with 12G VRAM, so I got 4 bit models, which may be substandard, but what can I do? LLaMA comes in different parameter sizes: 7B, 13B, 30B and 65B. My Nvidia card can handle 13B parameters, but my Nvidia card conks out when trying to do the 30B parameter one. The model is 25 gigs large. Reminds me of how Marvin Minsky used to say that when AI is really figured out, it will be able to be run on a typical home PC.

So with the text generation web UI I am now talking with LLaMA, which is more interesting than talking to Eliza.

Common sense questions and answers

Question: How many legs does a dog have?

Factual answer: 4

Question: What does the German word "unbehagen" mean in English?

Factual answer: It means discomfort, unease.

Question: Who wrote a letter to the Corinthians circa 53 AD?

Factual answer: Paul of Tarsus.

Question: What is 22+33?

Factual answer: 55

Question: What is the astrological sign of someone born March 16?

Factual answer: Pisces.

Question: Who designed the movie poster for the movie "Vertigo"?

Factual answer: The designer was Bill Gold.

OK, finally got him. Saul Bass designed the movie poster for Vertigo (Bill Gold did design the posters for other Hitchcock movies, like "The Wrong Man" though). What does ChatGPT say (I am pointed to GPT-3, not paying for GPT-4 currently).

Who designed the movie poster for the movie "Vertigo"?

The iconic movie poster for the film "Vertigo" was designed by the

renowned graphic designer Saul Bass. Bass was known for his innovative

and memorable movie posters, as well as his work in title sequences and

corporate logos. The poster for "Vertigo" features a stylized image of a

woman's face and swirling, vertigo-inducing shapes, which perfectly

captures the mood and themes of the film. The poster is considered a

classic of graphic design and is still widely recognized and admired

today.

Thu, 13 Oct 2022

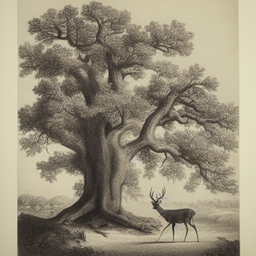

On August 31st, Stable Diffusion was released. It is a computer program where you tell it what image to create and it creates it. So you say "tree" and it will give you a picture of a tree. You say "oak tree" and it will give you a picture of an oak tree. You say "oil painting of an oak tree" and you will get an oil painting of an oak tree. You say "lithograph of an oak tree" and you will get a lithograph of an oak tree. You can say "lithograph of an oak tree and a deer" and get this -

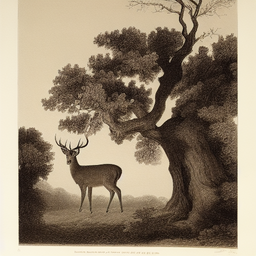

You can ask for it to give you some alternatives, in which case you can also get another try at it like

It is pretty simple to use - you tell it what image you want to see and it will attempt to give you that image.

It can do other things as well - if you like part of a picture it draws, but not another part, you can erase the part you don't like ("inpainting") and tell it to try something else. If you like a picture but want to see the surrounding envisioned scenery, you can ask for that as well ("outpainting"). If you give a text prompt and ask for some alternative pictures, and like one particular one, you can ask to make more pictures to look like that one. You can also do all of these things - inpainting, outpainting, image-to-image - with already existing pictures it did not create.

Stable Diffusion was released for free, and the source of the software is available (as are the pre-trained weights of its model). I am running it on my desktop at home. It can be run on a server on the Internet as well (hopefully one that has one or more GPUs).

Programs like this have been developed by other places in the past two years - DALL-E by OpenAI, Imagen by Google, Midjourney. There has been some limited access to them as well, although sometimes only by invite. Stable Diffusion just dropped this so that everyone can use it, and I am sure it will speed the adoption of deep learning.

AI

To go a little back into the history of all of this - in 1956 John McCarthy, Marvin Minsky, Claude Shannon and Nathaniel Rochester held a workshop on "artificial intelligence" for a study - "The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it."

Around this time, two schools of thought arose on how to do artificial intelligence - symbolic AI and connectionist AI. One idea in symbolic AI is that there are facts, and with a set of facts, one can use logic to come to conclusions. Symbolic AI is fairly understandable - you can understand and explain how a computer reasoned about something.The other school of thought in AI was connectionism. The idea in connectionism was to model the computer on the human brain - our brain is neurons which are connected, and connectionist AI created artifical neurons and wired them together. Connectionism has a focus on learning - data is sent through the neural network, and can be "remembered" later. Stable Diffusion is more in the connectionist school, which is the machine learning, deep learning school.

The connectionist school had some successes in the early days, but the 1969 book Perceptrons pointed out flaws in some things being done back then, which may have caused interest in connectionism to wane somewhat, and the success of some GOFAI type expert systems in the 1980s also favored that school over connectionism. However for the past ten years the connectionist deep learning school has seen a resurgence, and is in use at most of the big tech companies, and Stable Diffusion is part of this trend.

Stable Diffusion

Stable Diffusion uses a model to do what it does, and it cost about half a million dollars to train this model. The model is 4 gigs large on my computer, and it's amazing what can I can generate on my home computer with that 4 gig model. It's truly mind-blowing, and gives some insight into how the brain works and what computers will be able to do in the future. Stable Diffusion generates a lot of great stuff, but has trouble trying to generate some things (like a sign that says "WARNING" or something like that, or human faces in some cases). As time goes on, it an other systems will just get better.

You can download Stable Diffusion to your computer, but I know what I'm doing and had some difficulty getting it working. People are at work to make it simpler to do. You do need a decent graphics card. There are also forks of Stable Diffusion which may be simpler to install, or offer more features. If you think you can handle it, you can get Stable Diffusion here.

Probably easier is to just go to a website that takes text prompts and generates images, like Stable Diffusion web Dezgo Night cafe

Fri, 27 Jul 2018

Python Scikit-learn and MeanShift, for Android location app

I am writing a MoLo app (Mobile/Local) which might even become a MoLoSo app at some point (Mobile/Local/Social).

Any how, the way it works right now is it runs in the background, and if I am moving around, it sends my latitude and longitude off to my server. So I have a lot of Instance IDs and IP addresses, timestamps and latitude and longitudes on my server.

How to deal with taking those latitudes and longitudes and clustering them? Well I am sending the information to the database via a Python REST API script, so I I start with that. I change the MariaDB/MySQL calls from insert to select, and pull out the latitudes and longitudes.

The data I have is all the latitude/longtitude points I have been at over the past four months (although the app is not fully robust, and seems to get killed once in a while since an Oreo update, so not every latitude and longitude is covered). I don't know how many clusters I want, so I have the Python ML package scikit-learn do a MeanShift.

One thing I should point out is that in the current regular fast update interval for the app, I only send a location update if the location has changed beyond a limit (so if I am walking around a building, it will not be sending constant updates, but if I am driving in a car it will).

Scikit-learn's MeanShift clusters the locations into four categories. Running sequentially through the clusters, doing a predict, the first category starts with 2172 locations. Then 403 locations for category two. Then 925 locations for category three. Then another 410 locations for category two. Then 4490 locations for category one again. Then 403 locations for category four. Then 2541 locations for category one.

The center of the first cluster is about half a mile west and a quarter mile south of where I live. So I guess I spend more time in Manhattan, Brooklyn and western Queens than in Bayside and Nassau.

The center of the second cluster was near Wilmington, Delaware. The center of the third cluster was Burtonsville, Maryland.

It's due to the aforementioned properties of the app (I only send a location update if the location has changed beyond a small distance limit) that I had 2172 locations from March 12th to April 2nd in one clustrered area, and then on April 2nd - 1738 locations in two different clustered areas. On April 2nd I drove to and from my aunt's funeral in Maryland. That trip created two clusters - one in Wilmington for my drive there and back, and one in Maryland where I drove to the church, the graveyard and to the lunch afterward.

So then I have another 4490 location updates in cluster one, until those 403 updates in cluster four. The center of that cluster is Milford, Connecticut, and it revolves a trip I made to my other aunt's house near New Haven, Connecticut from May 25th to May 26th. Then it is another 2541 updates back in cluster one.

So...I could exclude by location, but I could also exclude by date, which is easier. So I exclude those three days and do MeanShift clustering again. Now I get six clusters.

Cluster one is centered about five blocks east, and slightly north of where I live. It has the bulk of entries. Cluster two is centered in east Midtown. Cluster three is centered near both the Woodside LIRR station and the 7 train junction at 74th Street. Cluster four is centered in Mineola, Long Island. Cluster five is centered south of Valley Stream, with 200 updates in three chunks. Cluster six is in Roosevelt, Long Island and only has one update.

MeanShift is good, but I may try other cluster types as well.

Sat, 21 Mar 2015

Preparing for Artificial Intelligence and Machine Learning

I took a class in AI in late 2013, but I only started looking at practical engineering implementions for ML in the past few months.

In looking at things like scikit-learn, I saw that a lot of the algorithms are already coded. You can even automatically test what classifier/model will be best for the data. In looking at the package and examples, I suspected that the hard part was wrangling the in the field data into an acceptable form for the algorithms.

I was graciously invited to an event a few months ago by a fellow named Scott, at which there were several people with good field knowledge of AI and ML. I talked to two of them about algorithms and data. Both of them made the point that getting the data wrangled into a suitable form was the hard part. I then went onto the net and read about this more carefully, and others with experience seemed to agree. So it is like other programming, where getting the data structures and data input right is usually the hard part, since if that is done well, implementing the algorithms is usually not much of a chore.

So I began working on my ML project. What does it do? Sometimes I go to local supermarkets, and what I am looking for is out of stock. So this ML predicts whether the supermarket will have the item I'm looking for in stock.

I architected the data structures (which consists of purchases, and observations that certain products are missing) and programmed the inputs. Then I added Google Maps so I could see where the local supermarkets were. The program would prefer close supermarkets to far ones.

Now I have run into a problem/non-problem. In architecting the solution so that the ML models and algorithms could better understand the problem, I architected a solution so that I could better understand the problem as well. Before I would pretty much go to my closest supermarket, if they were out of stock then on to the next closest one, and so forth. Now I have all that data available on my Android, including a map, and deciding which supermarket to go to is trivial. I don't need the ML so much any more. I wonder how often this happens - you build a solution so that AI/ML can be used, but once all the data is recorded in an understandable way, you don't need the AI/ML any more. Although there can be situations where there is a lot of data for someone to remember in their head, but not a lot for an ML solution.

Any how, I went through enough trouble to put all of this together, that I will still go through with writing a program that predicts if the items I want are in stock. I'll also make a map with time/distance information between my home and the supermarkets, and the supermarkets with each other. Then my program will give me advice on which supermarkets to try first.